K8s: Deploying Prometheus (Part 1)


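# Deploy the kube-state-metrics service

kube-state-metrics collects data on most built-in Kubernetes resources, such as Pods, Deployments and Services. It also exposes metrics about itself, mainly how many resources it collected and how many errors occurred during collection.

- Pull the image and push it to the private registry

Tip: using the newest release is not recommended.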

```bash
docker pull quay.io/coreos/kube-state-metrics:v1.5.0
docker tag quay.io/coreos/kube-state-metrics:v1.5.0 harbor.yfklife.cn/public/kube-state-metrics:v1.5.0
docker push harbor.yfklife.cn/public/kube-state-metrics:v1.5.0
```
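The same pull / tag / push sequence is repeated for every image in this article. A small bash sketch that derives the private-registry name from the upstream one by keeping only the last path component (`repo:tag`). `target_ref` and `mirror_image` are hypothetical helper names, and the sketch assumes you have already run `docker login harbor.yfklife.cn`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map an upstream image to its private-registry name,
# e.g. quay.io/coreos/kube-state-metrics:v1.5.0 -> <prefix>/kube-state-metrics:v1.5.0
target_ref() {
  local src="$1" dest_prefix="$2"
  # ${src##*/} strips everything up to and including the last "/"
  printf '%s/%s\n' "$dest_prefix" "${src##*/}"
}

# Hypothetical helper: pull, retag and push one image (needs docker and a prior docker login).
mirror_image() {
  local src="$1" dest
  dest="$(target_ref "$src" "$2")"
  docker pull "$src" && docker tag "$src" "$dest" && docker push "$dest"
}

# Usage (not executed here):
#   mirror_image quay.io/coreos/kube-state-metrics:v1.5.0 harbor.yfklife.cn/public
#   mirror_image prom/node-exporter:v0.15.0 harbor.yfklife.cn/public
```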

# Prepare the resource manifests

```bash
test -d /opt/application/prometheus/ || mkdir -p /opt/application/prometheus/ && cd /opt/application/prometheus/
```

- Configure the Deployment

```bash
vi /opt/application/prometheus/kube-state-metrics-deployment.yaml
```

```yaml
apiVersion: apps/v1
# Kubernetes 1.9.0 and later: apps/v1 (apps/v1beta2 is no longer served from 1.16)
# Kubernetes 1.8: apps/v1beta2
# Kubernetes versions before 1.8.0 should use apps/v1beta1 or extensions/v1beta1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    app: kube-state-metrics
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      app: kube-state-metrics
      grafanak8sapp: "true"
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: kube-state-metrics
        grafanak8sapp: "true"
    spec:
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        #image: harbor.yfklife.cn/public/kube-state-metrics:v1.9.8
        image: harbor.yfklife.cn/public/kube-state-metrics:v1.5.0
        imagePullPolicy: IfNotPresent
        ports:
        - name: http-metrics
          containerPort: 8080
          protocol: TCP
        #- name: telemetry
        #  containerPort: 8081
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          timeoutSeconds: 5
          successThreshold: 1
```
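The Deployment above sets `maxSurge` and `maxUnavailable` to 25%. A bash sketch of how the Deployment controller turns those percentages into pod counts: surge is rounded up, unavailable is rounded down, and if both come out as 0, `maxUnavailable` is forced to 1 so a rollout can still progress. `rollout_bounds` is a hypothetical helper for illustration, not a kubectl feature.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the Deployment controller's rounding of percentage values.
# Args: replicas surge_percent unavailable_percent ; prints "<maxSurge> <maxUnavailable>"
rollout_bounds() {
  local replicas="$1" surge_pct="$2" unavail_pct="$3"
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # rounded up (ceil)
  local unavail=$(( replicas * unavail_pct / 100 ))      # rounded down (floor)
  # Both 0 would block the rollout, so maxUnavailable becomes 1
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then
    unavail=1
  fi
  echo "$surge $unavail"
}

# rollout_bounds 1 25 25  -> "1 0"
# With replicas: 1, a new Pod is started and must become ready before the old one is removed.
```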

- Configure RBAC

```bash
vi kube-state-metrics-rbac.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
# kubernetes versions before 1.8.0 should use rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
rules:
- apiGroups: [""]
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs: ["list", "watch"]
- apiGroups: ["extensions"]
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources:
  - statefulsets
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources:
  - cronjobs
  - jobs
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources:
  - horizontalpodautoscalers
  verbs: ["list", "watch"]
- apiGroups: ["policy"]
  resources:
  - poddisruptionbudgets
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
# kubernetes versions before 1.8.0 should use rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
```
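To confirm the ClusterRoleBinding took effect, `kubectl auth can-i` can impersonate the service account, whose username has the form `system:serviceaccount:<namespace>:<name>`. A bash sketch; `sa_user` and `check_ksm_rbac` are hypothetical helper names, the resource list is only a sample of what the ClusterRole above grants, and `--as` requires impersonation rights (cluster-admin has them).

```bash
#!/usr/bin/env bash
# Hypothetical helper: the username a ServiceAccount authenticates as.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: ask the API server whether the kube-state-metrics SA
# may list/watch a sample of the resources granted by its ClusterRole.
# kubectl auth can-i prints "yes" or "no" for each check.
check_ksm_rbac() {
  local user resource verb
  user="$(sa_user kube-system kube-state-metrics)"
  for resource in pods nodes services statefulsets.apps jobs.batch; do
    for verb in list watch; do
      printf '%-6s %-18s ' "$verb" "$resource"
      kubectl auth can-i "$verb" "$resource" --as="$user"
    done
  done
}

# Usage (needs cluster access): check_ksm_rbac
```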

- Create the resources

```bash
kubectl apply -f kube-state-metrics-rbac.yaml
kubectl apply -f kube-state-metrics-deployment.yaml
kubectl get all -n kube-system | grep metrics   # check that the Pod is running
```
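Beyond checking that the Pod is Running, you can confirm kube-state-metrics is actually serving data. A bash sketch that counts how many series of one metric appear in the Prometheus text output; `count_series` is a hypothetical helper, `kube_pod_info` is used only as an example metric name, and the usage lines assume kubectl access from the machine running them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count series of one metric in Prometheus text format on stdin.
# A line counts if it starts with exactly NAME followed by "{" (labels) or a space,
# so "# HELP NAME ..." comments and longer names such as NAME_extra are ignored.
count_series() {
  local name="$1"
  awk -v n="$name" '
    index($0, n) == 1 {
      rest = substr($0, length(n) + 1)
      if (rest ~ /^[{ ]/) c++
    }
    END { print c + 0 }'
}

# Usage (port-forward runs in the background):
#   kubectl -n kube-system port-forward deploy/kube-state-metrics 8080:8080 &
#   curl -s http://127.0.0.1:8080/metrics | count_series kube_pod_info
```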

# Deploy the node-exporter service

Image source: Docker Hub (`prom/node-exporter`)

- Pull the image and push it to the private registry

```bash
docker pull prom/node-exporter:v0.15.0
docker tag prom/node-exporter:v0.15.0 harbor.yfklife.cn/public/node-exporter:v0.15.0
docker push harbor.yfklife.cn/public/node-exporter:v0.15.0
```

# Prepare the resource manifests

```bash
test -d /opt/application/prometheus/ || mkdir -p /opt/application/prometheus/ && cd /opt/application/prometheus/
```

- Configure the DaemonSet

```bash
vi node-exporter-daemonset.yaml
```

```yaml
kind: DaemonSet
# apps/v1 works on Kubernetes 1.9+; extensions/v1beta1 DaemonSets are no longer served from 1.16
apiVersion: apps/v1
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.yfklife.cn/public/node-exporter:v0.15.0
        imagePullPolicy: IfNotPresent
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      hostNetwork: true
```

- Create the resources

```bash
kubectl apply -f node-exporter-daemonset.yaml
kubectl get all -n kube-system -o wide | grep node-exporter   # check that the Pods are running
```
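Because node-exporter uses `hostNetwork` and `hostPort: 9100`, each node should answer on its own IP at port 9100. A bash sketch that reads `node_load1` from every node; `metric_value` and `check_node_exporters` are hypothetical helpers, and it assumes the machine running it can reach the nodes' InternalIP addresses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of an unlabelled metric from Prometheus text on stdin.
# Comment lines start with "#", so their first field never equals the metric name.
metric_value() {
  awk -v n="$1" '$1 == n { print $2; exit }'
}

# Hypothetical helper: query node-exporter on every node's InternalIP.
check_node_exporters() {
  local ip
  for ip in $(kubectl get nodes -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}'); do
    printf '%s node_load1=%s\n' "$ip" \
      "$(curl -s --max-time 3 "http://$ip:9100/metrics" | metric_value node_load1)"
  done
}

# Usage (needs kubectl and network access to the nodes): check_node_exporters
```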

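# Deploy the cadvisor service

In Kubernetes clusters before 1.9, cadvisor was integrated by default.

Image source: Docker Hub (`google/cadvisor`)

- Pull the image and push it to the private registry

cadvisor is fairly demanding about the kernel version, so choose a release that is compatible with your kernel. The node used here runs:

```
[root@hdss14-21 prometheus]# uname -a
Linux hdss14-21 3.10.0-693.el7.x86_64 #1 SMP Tue Aug 22 21:09:27 UTC 2017 x86_64 x86_64 x86_64 GNU/Linux
```

```bash
docker pull google/cadvisor:v0.31.0
docker tag google/cadvisor:v0.31.0 harbor.yfklife.cn/public/cadvisor:v0.31.0
docker push harbor.yfklife.cn/public/cadvisor:v0.31.0
```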

# Prepare the resource manifests

```bash
test -d /opt/application/prometheus/ || mkdir -p /opt/application/prometheus/ && cd /opt/application/prometheus/
```

- Configure the DaemonSet

```bash
vi cadvisor-daemonset.yaml
```

```yaml
apiVersion: apps/v1 # for Kubernetes versions before 1.9.0 use apps/v1beta2
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  annotations:
    seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists   # also matches a taint that carries a value, such as =master
        effect: NoSchedule
      containers:
      - name: cadvisor
        image: harbor.yfklife.cn/public/cadvisor:v0.31.0
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            memory: 200Mi
            cpu: 150m
          limits:
            cpu: 300m
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
          readOnly: true
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      automountServiceAccountToken: false
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          path: /data/docker   # Docker data root on these hosts; use /var/lib/docker if you kept the default
```

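To taint a node so that Pods are not scheduled onto it (unless they tolerate the taint), run the following against that node:

```bash
kubectl describe nodes hdss14-21.host.com   # check the node's current Taints
kubectl taint nodes hdss14-21.host.com node-role.kubernetes.io/master=master:NoSchedule   # add; format key=value:effect (help: kubectl taint --help)
kubectl taint node hdss14-21.host.com node-role.kubernetes.io/master-   # remove
```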
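The cadvisor toleration and the `kubectl taint` command above interact in a way that is easy to miss. A toleration that gives only `key` and `effect` gets the default operator `Equal` with an empty value, so it does not match a taint added as `node-role.kubernetes.io/master=master:NoSchedule`; `operator: Exists` matches regardless of the value. The bash sketch below mirrors that matching rule; `tolerates` is a hypothetical helper for illustration, not part of kubectl.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring Kubernetes' toleration-vs-taint check.
# Args: tol_key tol_operator tol_value tol_effect taint_key taint_value taint_effect
# An empty tol_operator means "Equal" (the API default). Exit status 0 = tolerated.
tolerates() {
  local tkey="$1" top="${2:-Equal}" tval="$3" teff="$4" key="$5" val="$6" eff="$7"
  [ -n "$teff" ] && [ "$teff" != "$eff" ] && return 1   # effect must match unless empty
  [ -n "$tkey" ] && [ "$tkey" != "$key" ] && return 1   # key must match unless empty
  case "$top" in
    Exists) return 0 ;;                                 # any taint value is accepted
    Equal)  [ "$tval" = "$val" ] ;;                     # values must be identical
    *)      return 1 ;;
  esac
}

# Key + effect only (operator Equal, value "") vs. a taint with value "master": not tolerated
#   tolerates node-role.kubernetes.io/master "" "" NoSchedule node-role.kubernetes.io/master master NoSchedule
# Same toleration with operator Exists: tolerated
#   tolerates node-role.kubernetes.io/master Exists "" NoSchedule node-role.kubernetes.io/master master NoSchedule
```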

- Add the cgroup symlink on each node that runs cadvisor

```bash
mount -o remount,rw /sys/fs/cgroup/
ln -s /sys/fs/cgroup/cpu,cpuacct/ /sys/fs/cgroup/cpuacct,cpu
ls -l /sys/fs/cgroup/ | grep cpu
```
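Running the `ln -s` step again when the link already exists does not simply do nothing: because the link points to a directory, `ln` tries to create a new link inside it. Also, on systemd hosts `/sys/fs/cgroup` is typically a tmpfs, so the link is lost on reboot and has to be recreated. A hedged bash sketch of an idempotent version; `ensure_cgroup_link` is a hypothetical name, and the base directory is a parameter only so the logic can be tried on a scratch directory (the remount step is still needed on a real node).

```bash
#!/usr/bin/env bash
# Hypothetical helper: expose <base>/cpu,cpuacct under the name <base>/cpuacct,cpu, once.
# BASE defaults to /sys/fs/cgroup; pass a scratch directory to try it safely.
ensure_cgroup_link() {
  local base="${1:-/sys/fs/cgroup}"
  if [ -L "$base/cpuacct,cpu" ] || [ -e "$base/cpuacct,cpu" ]; then
    echo "exists: $base/cpuacct,cpu"   # already present, do not touch it
    return 0
  fi
  ln -s "$base/cpu,cpuacct" "$base/cpuacct,cpu"
}

# Usage on a node (as root, after: mount -o remount,rw /sys/fs/cgroup/):
#   ensure_cgroup_link
```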

- 创建资源

kubectl apply -f cadvisor-daemonset.yaml

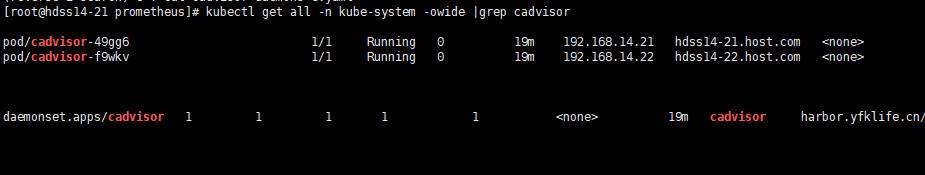

kubectl get all -n kube-system -owide |grep cadvisor #检查容器是否正常运行

2

3

# Deploy the blackbox-exporter service

Image source: Docker Hub (`prom/blackbox-exporter`)

```bash
docker pull prom/blackbox-exporter:v0.19.0
docker tag prom/blackbox-exporter:v0.19.0 harbor.yfklife.cn/public/blackbox-exporter:v0.19.0
docker push harbor.yfklife.cn/public/blackbox-exporter:v0.19.0
```

# Prepare the resource manifests

```bash
test -d /opt/application/prometheus/ || mkdir -p /opt/application/prometheus/ && cd /opt/application/prometheus/
```

- Configure the ConfigMap

```bash
vi blackbox-exporter-configmap.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          # Go reports HTTP/2 responses as "HTTP/2.0", so that exact string is listed
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s
```

- Configure the Deployment

```bash
vi blackbox-exporter-deployment.yaml
```

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    deployment.kubernetes.io/revision: "1"   # annotation values must be strings
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.yfklife.cn/public/blackbox-exporter:v0.19.0
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
```

- Configure the Service

```bash
vi blackbox-exporter-service.yaml
```

```yaml
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115
```

- Configure the Ingress

```bash
vi blackbox-exporter-ingress.yaml
```

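```yaml
# extensions/v1beta1 Ingress is no longer served from Kubernetes 1.22. On 1.19+ use
# networking.k8s.io/v1, which needs pathType and backend.service.name / backend.service.port.name.
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.yfklife.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: blackbox-port
```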

- Create the resources

```bash
kubectl apply -f blackbox-exporter-configmap.yaml
kubectl apply -f blackbox-exporter-deployment.yaml
kubectl apply -f blackbox-exporter-service.yaml
kubectl apply -f blackbox-exporter-ingress.yaml
```

Add a DNS record that points the domain at Traefik, then open:

blackbox.yfklife.cn
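Once the domain resolves, the blackbox exporter is driven through its `/probe` endpoint: `module` selects an entry from `blackbox.yml` (`http_2xx` or `tcp_connect` in the ConfigMap above) and `target` is the address to probe. A small bash sketch; `probe_url` is a hypothetical helper and `example.com` is a placeholder target. Targets containing `&` or `?` would need URL-encoding first.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a blackbox-exporter probe URL.
# Args: exporter_host module target
probe_url() {
  local host="$1" module="$2" target="$3"
  printf 'http://%s/probe?module=%s&target=%s\n' "$host" "$module" "$target"
}

# Usage: a successful probe reports "probe_success 1"
#   curl -s "$(probe_url blackbox.yfklife.cn http_2xx example.com)" | grep '^probe_success'
#   curl -s "$(probe_url blackbox.yfklife.cn tcp_connect example.com:443)" | grep '^probe_success'
```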
