Deploying k8s Components Step by Step (Part 2)

# Deploying kubelet

[192.168.14.21, 192.168.14.22] Deploy kubelet on these hosts.

# Issuing the kubelet certificate

[192.168.14.200] Issue the certificate on this host.

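Note: list every node IP in `hosts`, and add a few extra IPs you may use later. If a new node's IP is not in the certificate, you must reissue the certificate and copy it to all hosts.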

cd /opt/certs && vi /opt/certs/kubelet-csr.json

{
    "CN": "k8s-kubelet",
    "hosts": [
        "127.0.0.1",
        "192.168.14.10",
        "192.168.14.21",
        "192.168.14.22",
        "192.168.14.23",
        "192.168.14.24",
        "192.168.14.25",
        "192.168.14.26",
        "192.168.14.27",
        "192.168.14.28"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
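Because every node IP has to appear in `hosts`, it can be less error-prone to generate this file than to edit it by hand. The following is a minimal sketch, assuming the node IPs form a contiguous range; `gen_kubelet_csr` and `csr_has_ip` are hypothetical helpers, and the subject fields simply mirror the JSON above.

```bash
#!/usr/bin/env bash
# Sketch: generate kubelet-csr.json for a contiguous range of node IPs.
# Hypothetical helpers; subject fields copied from the JSON above.

# gen_kubelet_csr PREFIX FIRST LAST VIP OUTFILE
gen_kubelet_csr() {
  local prefix=$1 first=$2 last=$3 vip=$4 out=$5
  local -a ips=("127.0.0.1" "$vip")
  local i n
  for ((i = first; i <= last; i++)); do
    ips+=("${prefix}.${i}")
  done
  n=${#ips[@]}
  {
    printf '{\n  "CN": "k8s-kubelet",\n  "hosts": [\n'
    for ((i = 0; i < n; i++)); do
      # JSON forbids a trailing comma after the last element.
      if ((i < n - 1)); then
        printf '    "%s",\n' "${ips[i]}"
      else
        printf '    "%s"\n' "${ips[i]}"
      fi
    done
    printf '  ],\n'
    cat <<'EOF'
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "od", "OU": "ops" }
  ]
}
EOF
  } > "$out"
}

# csr_has_ip FILE IP: succeeds if IP is listed (quoted) in FILE.
csr_has_ip() {
  grep -qF "\"$2\"" "$1"
}
```

For example, `gen_kubelet_csr 192.168.14 21 28 192.168.14.10 /opt/certs/kubelet-csr.json` reproduces the host list above, and `csr_has_ip /opt/certs/kubelet-csr.json 192.168.14.29` tells you whether a new node would need a reissued certificate.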


- Generate the kubelet certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

# Deploying the kubelet nodes

[192.168.14.21, 192.168.14.22] Deploy kubelet on these hosts.

cd /opt/kubernetes/server/bin/certs/

#Upload the certificate files just issued [kubelet.pem, kubelet-key.pem]

chmod 600 ./*-key.pem

cd /opt/kubernetes/server/bin/conf


# Creating the kubelet kubeconfig

- set-cluster

cd /opt/kubernetes/server/bin/conf
kubectl config set-cluster myk8s \
--certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \
--embed-certs=true \
--server=https://192.168.14.10:7443 \
--kubeconfig=kubelet.kubeconfig


- set-credentials

kubectl config set-credentials k8s-node \
--client-certificate=/opt/kubernetes/server/bin/certs/client.pem \
--client-key=/opt/kubernetes/server/bin/certs/client-key.pem \
--embed-certs=true \
--kubeconfig=kubelet.kubeconfig


- set-context

kubectl config set-context myk8s-context \
--cluster=myk8s \
--user=k8s-node \
--kubeconfig=kubelet.kubeconfig


- use-context

kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

Copy /opt/kubernetes/server/bin/conf/kubelet.kubeconfig to the other kubelet nodes, or rerun the four commands above on each node.
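Since the same four `kubectl config` commands are repeated for every component, they can be wrapped in one function. This is a sketch; `make_kubeconfig` and the variables `KUBECTL`, `CA_PEM` and `APISERVER` are hypothetical names, with `CA_PEM` and `APISERVER` defaulting to the CA path and VIP used above. Setting `KUBECTL=echo` prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch: run set-cluster / set-credentials / set-context / use-context
# for one kubeconfig file. Hypothetical helper and variable names.

# make_kubeconfig OUTFILE USER CLIENT_CERT CLIENT_KEY
make_kubeconfig() {
  local out=$1 user=$2 cert=$3 key=$4
  local kubectl=${KUBECTL:-kubectl}
  local ca=${CA_PEM:-/opt/kubernetes/server/bin/certs/ca.pem}
  local server=${APISERVER:-https://192.168.14.10:7443}

  "$kubectl" config set-cluster myk8s \
    --certificate-authority="$ca" --embed-certs=true \
    --server="$server" --kubeconfig="$out" || return
  "$kubectl" config set-credentials "$user" \
    --client-certificate="$cert" --client-key="$key" \
    --embed-certs=true --kubeconfig="$out" || return
  "$kubectl" config set-context myk8s-context \
    --cluster=myk8s --user="$user" --kubeconfig="$out" || return
  "$kubectl" config use-context myk8s-context --kubeconfig="$out"
}
```

On a node, `make_kubeconfig kubelet.kubeconfig k8s-node /opt/kubernetes/server/bin/certs/client.pem /opt/kubernetes/server/bin/certs/client-key.pem` would rebuild the file in the current directory; the same call with the kube-proxy user and certificates covers kube-proxy.kubeconfig.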

# Binding the node role

- Create the resource manifest k8s-node.yaml

vi /opt/kubernetes/server/bin/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node


- Create the binding

kubectl create -f k8s-node.yaml #only needs to be created once

kubectl get clusterrolebinding k8s-node -o yaml


# Preparing the pause base image

The pause container sets up the pod's shared namespaces (net, ipc, uts, etc.).

docker login harbor.yfklife.cn #log in to the private registry

#Pull the base image

docker pull kubernetes/pause

#Tag and push

docker tag kubernetes/pause:latest harbor.yfklife.cn/public/pause:latest

docker push harbor.yfklife.cn/public/pause:latest
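The pull, tag and push sequence is the same for every public image mirrored into Harbor (the nginx image used later, for instance). A sketch with hypothetical helpers `harbor_ref` and `mirror_image`; it assumes you have already run `docker login` and that the target Harbor project (here `public`) exists.

```bash
#!/usr/bin/env bash
# Sketch: compute the Harbor name for an upstream image and mirror it.
# Hypothetical helper names.

# harbor_ref SRC REGISTRY PROJECT: print REGISTRY/PROJECT/<name>:<tag>
harbor_ref() {
  local src=$1 registry=$2 project=$3
  local name=${src##*/}                  # drop repository path: "pause" or "nginx:1.13.6"
  [[ $name == *:* ]] || name+=":latest"  # docker assumes :latest when no tag is given
  printf '%s/%s/%s\n' "$registry" "$project" "$name"
}

# mirror_image SRC REGISTRY PROJECT: pull, tag and push (requires docker login)
mirror_image() {
  local dst
  dst=$(harbor_ref "$@") || return
  docker pull "$1" && docker tag "$1" "$dst" && docker push "$dst"
}
```

`mirror_image kubernetes/pause harbor.yfklife.cn public` performs the three commands above in one step.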


# Managing the kubelet service with supervisord

[192.168.14.21, 192.168.14.22] Deploy kubelet on these hosts.

- Create the startup script

vi /opt/kubernetes/server/bin/kubelet.sh

Change the hostname and the pause image address to match each node and your registry.

#!/bin/sh
./kubelet \
--anonymous-auth=false \
--cgroup-driver systemd \
--cluster-dns 10.254.0.2 \
--cluster-domain cluster.local \
--runtime-cgroups=/systemd/system.slice \
--kubelet-cgroups=/systemd/system.slice \
--fail-swap-on="false" \
--client-ca-file ./certs/ca.pem \
--tls-cert-file ./certs/kubelet.pem \
--tls-private-key-file ./certs/kubelet-key.pem \
--hostname-override hdss14-21.host.com \
--kubeconfig ./conf/kubelet.kubeconfig \
--log-dir /data/logs/kubernetes/kube-kubelet \
--pod-infra-container-image harbor.yfklife.cn/public/pause:latest \
--root-dir /data/kubelet
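Only `--hostname-override` (and possibly the pause image) differs between nodes, so the script can be rendered per node instead of edited by hand. A sketch, assuming the `hdss<3rd octet>-<4th octet>.host.com` naming seen in this article; `node_hostname`, `write_kubelet_sh` and the `PAUSE_IMAGE` override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: render kubelet.sh for one node. Hypothetical helper names.

# node_hostname IP -> hdss<c>-<d>.host.com (assumed naming convention)
node_hostname() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  printf 'hdss%s-%s.host.com\n' "$c" "$d"
}

# write_kubelet_sh IP OUTFILE: write the startup script with this node's hostname.
# Set PAUSE_IMAGE to override the default pause image address.
write_kubelet_sh() {
  local host
  host=$(node_hostname "$1")
  # Unquoted heredoc: ${host} and ${PAUSE_IMAGE...} expand now; \\ becomes a literal \.
  cat > "$2" <<EOF
#!/bin/sh
./kubelet \\
  --anonymous-auth=false \\
  --cgroup-driver systemd \\
  --cluster-dns 10.254.0.2 \\
  --cluster-domain cluster.local \\
  --runtime-cgroups=/systemd/system.slice \\
  --kubelet-cgroups=/systemd/system.slice \\
  --fail-swap-on="false" \\
  --client-ca-file ./certs/ca.pem \\
  --tls-cert-file ./certs/kubelet.pem \\
  --tls-private-key-file ./certs/kubelet-key.pem \\
  --hostname-override ${host} \\
  --kubeconfig ./conf/kubelet.kubeconfig \\
  --log-dir /data/logs/kubernetes/kube-kubelet \\
  --pod-infra-container-image ${PAUSE_IMAGE:-harbor.yfklife.cn/public/pause:latest} \\
  --root-dir /data/kubelet
EOF
  chmod +x "$2"
}
```

On 192.168.14.22, `write_kubelet_sh 192.168.14.22 /opt/kubernetes/server/bin/kubelet.sh` writes a script with `--hostname-override hdss14-22.host.com`.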


- Create the supervisord config file (change the program name suffix, e.g. 14-21, on each node)

vi /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-14-21]
command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)


- Create the directories and make the script executable

mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

chmod +x /opt/kubernetes/server/bin/kubelet.sh


- Reload supervisord and check the status

supervisorctl update

supervisorctl status
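Because of `startsecs=30`, the program stays in `STARTING` for about 30 seconds before `supervisorctl status` reports `RUNNING`. A small polling sketch (hypothetical `is_running` and `wait_running`), assuming the default `supervisorctl status` layout in which the first two columns are the program name and its state.

```bash
#!/usr/bin/env bash
# Sketch: wait until a supervisord program reaches RUNNING.
# Hypothetical helper names; assumes "name state ..." columns.

# is_running PROGRAM: reads `supervisorctl status` output on stdin,
# succeeds if PROGRAM is listed with state RUNNING.
is_running() {
  awk -v p="$1" '$1 == p && $2 == "RUNNING" { found = 1 } END { exit !found }'
}

# wait_running PROGRAM [TIMEOUT_SECS]: poll every 5 s, give up after the timeout
# (keep the timeout larger than startsecs).
wait_running() {
  local prog=$1 timeout=${2:-60} waited=0
  until supervisorctl status "$prog" | is_running "$prog"; do
    (( waited >= timeout )) && return 1
    sleep 5
    (( waited += 5 ))
  done
}
```

For example, `wait_running kube-kubelet-14-21 90 && echo ok`.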


- Set the node role labels

kubectl get nodes --show-labels

kubectl label node hdss14-21.host.com node-role.kubernetes.io/master=

kubectl label node hdss14-21.host.com node-role.kubernetes.io/node=
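The two `kubectl label` commands have to be repeated for every node. A sketch that loops over node names (hypothetical `label_nodes`; `KUBECTL` is a hypothetical override, e.g. `KUBECTL=echo` for a dry run). `--overwrite` is added so that rerunning it does not fail on labels that already exist.

```bash
#!/usr/bin/env bash
# Sketch: give each node both the master and node role labels.
# Hypothetical helper name; KUBECTL defaults to kubectl.

label_nodes() {
  local kubectl=${KUBECTL:-kubectl} node role
  for node in "$@"; do
    for role in master node; do
      "$kubectl" label node "$node" "node-role.kubernetes.io/${role}=" --overwrite || return
    done
  done
}
```

Usage: `label_nodes hdss14-21.host.com hdss14-22.host.com`.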


# Deploying kube-proxy

kube-proxy connects the pod network with the cluster (Service) network.

# Issuing the kube-proxy certificate

[192.168.14.200] Issue the certificate on this host.

- Create the JSON config for the certificate signing request (CSR)

cd /opt/certs && vi /opt/certs/kube-proxy-csr.json

{
    "CN": "system:kube-proxy",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}


- Generate the kube-proxy client certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client

# Deploying the kube-proxy service

[192.168.14.21, 192.168.14.22] Deploy kube-proxy on these hosts.

cd /opt/kubernetes/server/bin/certs

#Upload the newly issued pem files [kube-proxy-client-key.pem, kube-proxy-client.pem] next to the previously issued ones

#Restrict the private key permissions

chmod 600 ./*-key.pem

cd /opt/kubernetes/server/bin/conf


# Creating the kube-proxy kubeconfig

- set-cluster

cd /opt/kubernetes/server/bin/conf
kubectl config set-cluster myk8s \
--certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \
--embed-certs=true \
--server=https://192.168.14.10:7443 \
--kubeconfig=kube-proxy.kubeconfig


- set-credentials

kubectl config set-credentials kube-proxy \
--client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem \
--client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig


- set-context

kubectl config set-context myk8s-context \
--cluster=myk8s \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig


- use-context

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

Copy /opt/kubernetes/server/bin/conf/kube-proxy.kubeconfig to the other kube-proxy nodes, or rerun the four commands above on each node.

# Loading the ipvs kernel modules

- Add a module-loading script

cd /opt && vi ./ipvs.sh

#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done


- Run it and check that the modules are loaded

chmod +x /opt/ipvs.sh

/opt/ipvs.sh

lsmod |grep ip_vs
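`lsmod | grep ip_vs` shows everything that is loaded, which makes it easy to overlook one specific module, for example `ip_vs_nq` for the `nq` scheduler that kube-proxy is configured with below. A sketch (hypothetical `missing_mods`) that reads `lsmod` output on stdin and prints each requested module that is not loaded.

```bash
#!/usr/bin/env bash
# Sketch: report which of the given modules are absent from lsmod output.
# Hypothetical helper name; lsmod output is read from stdin.

missing_mods() {
  local loaded mod
  loaded=$(awk 'NR > 1 { print $1 }')   # skip the "Module Size Used by" header
  for mod in "$@"; do
    grep -qxF -- "$mod" <<< "$loaded" || echo "$mod"
  done
}
```

Usage: `lsmod | missing_mods ip_vs ip_vs_rr ip_vs_nq` prints nothing when all three are loaded.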


- Load the modules at boot

echo '/opt/ipvs.sh' >> /etc/rc.d/rc.local

chmod +x /etc/rc.d/rc.local

ln -s /usr/lib/systemd/system/rc-local.service /etc/systemd/system/multi-user.target.wants/rc-local.service

systemctl enable rc-local.service #rc.local is not run at boot by default


For reference, `cat /usr/lib/systemd/system/rc-local.service` shows:

[Unit]
Description=/etc/rc.d/rc.local Compatibility
ConditionFileIsExecutable=/etc/rc.d/rc.local
After=network.target

[Service]
Type=forking
ExecStart=/etc/rc.d/rc.local start
TimeoutSec=0
RemainAfterExit=yes
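As an alternative to rc.local (a different technique from the one used here), systemd's `systemd-modules-load.service` loads every module listed in `/etc/modules-load.d/*.conf` at boot. A sketch with the hypothetical helper `write_modules_load_conf`, which writes one module name per file found in the ipvs module directory; unlike `ipvs.sh`, it does not skip modules that `modinfo` cannot resolve.

```bash
#!/usr/bin/env bash
# Sketch: build a modules-load.d file from the ipvs module directory.
# Hypothetical helper name.

# write_modules_load_conf MODDIR OUTFILE
write_modules_load_conf() {
  local dir=$1 out=$2 f
  : > "$out"                       # truncate / create the output file
  for f in "$dir"/*; do
    [ -e "$f" ] || continue        # empty directory: glob stays literal
    f=${f##*/}                     # basename, e.g. ip_vs_rr.ko.xz
    echo "${f%%.*}" >> "$out"      # strip all extensions -> ip_vs_rr
  done
}
```

Usage: `write_modules_load_conf "/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs" /etc/modules-load.d/ipvs.conf`.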


# Managing the kube-proxy service with supervisord

[192.168.14.21, 192.168.14.22] Deploy kube-proxy on these hosts.

- Create the kube-proxy startup script

vi /opt/kubernetes/server/bin/kube-proxy.sh

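Notes:

1. NodePort Services may behave differently in ipvs mode than in iptables mode; test NodePort access on your Kubernetes version.

2. Change the hostname on each node.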

#!/bin/sh
./kube-proxy \
--cluster-cidr 172.7.0.0/16 \
--hostname-override hdss14-21.host.com \
--proxy-mode=ipvs \
--ipvs-scheduler=nq \
--kubeconfig ./conf/kube-proxy.kubeconfig


- Create the supervisord config file (change the program name suffix on each node)

vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-14-21]
command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)
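The kubelet and kube-proxy ini files differ only in program name, script and log path, and the program name suffix (`14-21`) must change per node. A sketch that renders such a file from the node IP (hypothetical `write_supervisor_ini`); it keeps the settings used in this article and omits the inline comments.

```bash
#!/usr/bin/env bash
# Sketch: render a supervisord program file for one node.
# Hypothetical helper name; settings copied from the ini files above.

# write_supervisor_ini NAME IP SCRIPT LOGFILE OUTFILE
write_supervisor_ini() {
  local name=$1 ip=$2 script=$3 log=$4 out=$5 a b c d
  IFS=. read -r a b c d <<< "$ip"   # 192.168.14.21 -> suffix 14-21
  cat > "$out" <<EOF
[program:${name}-${c}-${d}]
command=${script}
numprocs=1
directory=${script%/*}
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=${log}
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}
```

For example, on 192.168.14.22: `write_supervisor_ini kube-proxy 192.168.14.22 /opt/kubernetes/server/bin/kube-proxy.sh /data/logs/kubernetes/kube-proxy/proxy.stdout.log /etc/supervisord.d/kube-proxy.ini`, and likewise with `kube-kubelet` and `kubelet.sh` for kubelet.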


- Make the script executable and create the log directory

chmod +x /opt/kubernetes/server/bin/kube-proxy.sh

mkdir -p /data/logs/kubernetes/kube-proxy


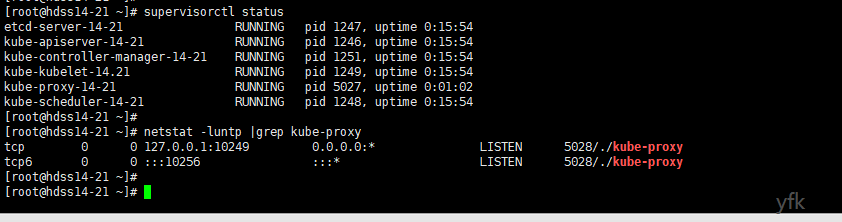

- Reload supervisord and check the status

supervisorctl update

supervisorctl status

netstat -luntp |grep kube-proxy


- Check that IPVS scheduling works

yum install -y ipvsadm

ipvsadm -Ln
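`ipvsadm -Ln` lists each virtual server (a Service `ClusterIP:port`) followed by its real servers on `->` lines. A sketch (hypothetical `ipvs_backend_counts`) that summarizes how many real servers each virtual server has, which makes a Service with no backends easy to spot; it assumes the usual `ipvsadm -Ln` layout.

```bash
#!/usr/bin/env bash
# Sketch: count real servers per virtual server in `ipvsadm -Ln` output (stdin).
# Hypothetical helper name.

ipvs_backend_counts() {
  awk '
    # A virtual server line: "TCP  10.254.0.1:443 nq"
    $1 == "TCP" || $1 == "UDP" || $1 == "SCTP" {
      vs = $1 " " $2; count[vs] += 0; order[++n] = vs; next
    }
    # A real server line under the current virtual server (header lines come first, vs is empty)
    $1 == "->" && vs != "" { count[vs]++ }
    END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
  '
}
```

Usage: `ipvsadm -Ln | ipvs_backend_counts`; a line ending in ` 0` points at a Service whose endpoints are missing.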


# Verifying the cluster: starting the first pod

- Create the pod (DaemonSet) YAML file

cd /root && vi nginx-ds.yaml

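# apps/v1: extensions/v1beta1 DaemonSets were removed in Kubernetes 1.16; apps/v1 requires spec.selector
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  selector:
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        # change the image address to your registry
        image: harbor.yfklife.cn/public/nginx:1.13.6
        ports:
        - containerPort: 80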


Create it:

kubectl create -f nginx-ds.yaml

- Create the Service YAML file

cd /root && vi nginx-svc-ds.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-ds
  type: ClusterIP


Create the Service:

kubectl create -f nginx-svc-ds.yaml

Check:

kubectl get pod -owide

kubectl get cs

kubectl get svc
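Beyond `kubectl get`, it helps to confirm that each nginx pod actually answers HTTP. A sketch (hypothetical `check_nginx_pods`; `KUBECTL` and `CURL` are hypothetical overrides) that reads pod IPs via a kubectl JSONPath query on the `app=nginx-ds` label and requests `/` on each. Pods on another node may not be reachable until cross-node pod networking is configured, so a `FAIL` there does not necessarily mean nginx is broken.

```bash
#!/usr/bin/env bash
# Sketch: curl every nginx-ds pod IP and report OK/FAIL.
# Hypothetical helper name; KUBECTL and CURL default to kubectl and curl.

check_nginx_pods() {
  local kubectl=${KUBECTL:-kubectl} curl=${CURL:-curl} ip fail=0
  for ip in $("$kubectl" get pod -l app=nginx-ds \
      -o jsonpath='{range .items[*]}{.status.podIP}{"\n"}{end}'); do
    if "$curl" -s -o /dev/null --max-time 3 "http://${ip}/"; then
      echo "OK   ${ip}"
    else
      echo "FAIL ${ip}"
      fail=1
    fi
  done
  return "$fail"   # non-zero if any pod did not respond
}
```

Run it on a node: `check_nginx_pods`. The same idea applies to the Service: `curl -s -o /dev/null -w '%{http_code}\n' http://<CLUSTER-IP>/` with the ClusterIP shown by `kubectl get svc`.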
