Deploying k8s components step by step (Part 1)

# Deploying etcd

# Issuing the etcd certificates

[192.168.14.200] Issue the certificates on this host.

# CA signing configuration (ca-config.json)

cd /opt/certs && vi /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}
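The `expiry` values in this file are given in hours. As a quick sanity check, here is a minimal sketch that converts such a value to years; the helper name `hours_to_years` is hypothetical and a 365-day year is assumed.

```shell
#!/bin/sh
# Hypothetical helper: convert a cfssl-style expiry such as "175200h" to whole years.
# Assumes the value ends in "h" and that a year has 365 days.
hours_to_years() {
    hours=${1%h}                 # strip the trailing "h"
    echo $(( hours / 24 / 365 ))
}

hours_to_years 175200h   # prints 20
```

So every profile here issues certificates that are valid for roughly 20 years.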


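- Certificate types

| Type | Description |
|---|---|
| client | Used by clients so that the server can authenticate them, e.g. etcdctl, etcd proxy, fleetctl, the docker client |
| server | Used by servers so that clients can verify the server's identity, e.g. the docker daemon, kube-apiserver |
| peer | Mutual (two-way) certificate, used for communication between etcd cluster members |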

# Peer (mutual) certificate request

vi /opt/certs/etcd-peer-csr.json

The hosts list holds the IPs of every host that may run etcd; one spare IP is included.

{

"CN": "k8s-etcd",

"hosts": [

"192.168.14.11",

"192.168.14.12",

"192.168.14.21",

"192.168.14.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}
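Since the certificate is only valid for the addresses listed in `hosts`, it can be worth checking that every planned etcd member is present before signing. The following is a minimal sketch under the assumption that the CSR file has the layout shown above; the function name `csr_has_host` and the `CSR_FILE` variable are hypothetical, and the check is a plain text match rather than real JSON parsing.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the quoted IP appears in the CSR file.
# This is a fixed-string match on "IP" (with quotes), not a JSON parser.
csr_has_host() {
    grep -qF "\"$2\"" "$1"
}

# Example: check the three planned etcd members against the CSR file, if present.
csr=${CSR_FILE:-/opt/certs/etcd-peer-csr.json}
if [ -f "$csr" ]; then
    for ip in 192.168.14.12 192.168.14.21 192.168.14.22; do
        csr_has_host "$csr" "$ip" || echo "missing from hosts: $ip"
    done
fi
```

Matching on the quoted value keeps `192.168.14.1` from being mistaken for `192.168.14.11`.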


# Signing the etcd certificate

- Generate the etcd certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json |cfssl-json -bare etcd-peer
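With `-bare etcd-peer`, the command writes `etcd-peer.pem`, `etcd-peer-key.pem` and `etcd-peer.csr` into the current directory. To confirm that the host IPs ended up in the certificate, something like the sketch below can be used. It assumes `openssl` is installed on the signing host; the function name `show_sans` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the Subject Alternative Name section of a certificate.
# Assumes openssl is installed; returns 1 if the file does not exist.
show_sans() {
    [ -f "$1" ] || { echo "certificate not found: $1" >&2; return 1; }
    openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name'
}

# Example (run inside /opt/certs after signing):
#   show_sans etcd-peer.pem
```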

# Deploying the etcd service

The cluster should have an odd number of members.
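The reason for an odd member count is that etcd needs a majority (quorum) of members to accept writes, and going from an odd size to the next even size raises the quorum without raising the number of failures the cluster survives. A small sketch of that arithmetic (the function names are hypothetical):

```shell
#!/bin/sh
# Quorum is a strict majority of members; tolerance is how many members may fail.
quorum()    { echo $(( $1 / 2 + 1 )); }
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerance "$n")"
done
```

For the three-member cluster used here the quorum is 2, so one member may be down; a fourth member would still only tolerate one failure.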

[192.168.14.12, 192.168.14.21, 192.168.14.22] Deploy the etcd cluster on these hosts.

etcd download link

- Create the user

useradd -s /sbin/nologin -M etcd

- Download the etcd package and extract it

cd /opt/soft # download the package into this directory

tar xfv etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

mv /opt/etcd-v3.1.20-linux-amd64 /opt/etcd-v3.1.20

ln -s /opt/etcd-v3.1.20 /opt/etcd

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

cd /opt/etcd/certs

# Copy ca.pem, etcd-peer-key.pem and etcd-peer.pem from 192.168.14.200 into the current directory (/opt/etcd/certs)


- Configure the etcd startup script

vi /opt/etcd/etcd-server-startup.sh

Note: change the IP and member name to match each host (192.168.14.12, 192.168.14.21, 192.168.14.22).

#!/bin/sh

./etcd --name etcd-server-14-12 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.14.12:2380 \

--listen-client-urls https://192.168.14.12:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.14.12:2380 \

--advertise-client-urls https://192.168.14.12:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-14-12=https://192.168.14.12:2380,etcd-server-14-21=https://192.168.14.21:2380,etcd-server-14-22=https://192.168.14.22:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout
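Only the member name and the host IP differ between the three copies of this script. As a minimal sketch of the naming convention used above (the helper names `etcd_name` and `initial_cluster` are hypothetical, not part of etcd), the per-host values can be derived from the IP list:

```shell
#!/bin/sh
# Hypothetical helper: derive the member name from a host IP using its third
# and fourth octets, e.g. 192.168.14.12 -> etcd-server-14-12.
etcd_name() {
    echo "$1" | awk -F. '{ printf "etcd-server-%s-%s\n", $3, $4 }'
}

# Build the --initial-cluster value from the member IPs.
initial_cluster() {
    out=""
    for ip in "$@"; do
        out="${out:+$out,}$(etcd_name "$ip")=https://$ip:2380"
    done
    echo "$out"
}

initial_cluster 192.168.14.12 192.168.14.21 192.168.14.22
```

The printed value is the same on every member; only `--name` and the listen/advertise URLs change per host.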


- Set ownership and permissions

chmod 600 etcd-peer-key.pem

chown -R etcd:etcd /opt/etcd-v3.1.20/ /data/etcd /data/logs/etcd-server /opt/etcd/certs

chmod +x /opt/etcd/etcd-server-startup.sh


# Managing the etcd service with supervisord

- Install and start supervisord

yum install supervisor -y

systemctl start supervisord

systemctl enable supervisord


- Create the supervisor .ini file for etcd

vi /etc/supervisord.d/etcd-server.ini

[program:etcd-server-14-12]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

killasgroup=true

stopasgroup=true


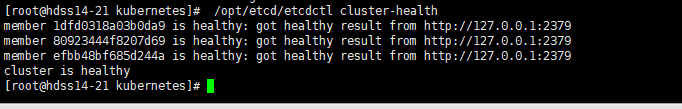

- Reload the supervisor configuration and check the service

supervisorctl update

supervisorctl status

netstat -luntp|grep etcd

/opt/etcd/etcdctl cluster-health


[root@hdss14-12 certs]# supervisorctl update

etcd-server-14-12: added process group

[root@hdss14-12 certs]#

[root@hdss14-12 certs]# # check

[root@hdss14-12 certs]# supervisorctl status

etcd-server-14-12 RUNNING pid 943, uptime 0:43:04

[root@hdss14-12 certs]#

[root@hdss14-12 certs]# netstat -luntp|grep etcd

tcp 0 0 192.168.14.12:2379 0.0.0.0:* LISTEN 1087/./etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 1087/./etcd

tcp 0 0 192.168.14.12:2380 0.0.0.0:* LISTEN 1087/./etcd
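When this check is repeated on several hosts, it may help to test the state in a script instead of reading the output by eye. The sketch below is only an illustration: the function name `is_running` is hypothetical, and it assumes the `supervisorctl status` line format shown in the output above (program name first, state second).

```shell
#!/bin/sh
# Hypothetical helper: read `supervisorctl status` output on stdin and succeed
# only if the named program is reported as RUNNING.
is_running() {
    awk -v name="$1" '$1 == name && $2 == "RUNNING" { found = 1 } END { exit !found }'
}

# Example with the status line shown above.
echo "etcd-server-14-12 RUNNING pid 943, uptime 0:43:04" | is_running etcd-server-14-12 \
    && echo "etcd-server-14-12 is running"
```

On a live host the input would come from `supervisorctl status | is_running etcd-server-14-12`.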


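# Server power loss: fewer than n/2 etcd members left running

- Error message: panic: invalid freelist page: 3487582951472510517, page type is branch

When I checked the etcd service, only one of the three members (A) was running; the other two (B and C) could not be started.

**Be careful when deleting data in production; recovering this way is not recommended.**

First attempt: I deleted the contents of the data directory on B and C while A kept running, but B and C still could not be started. (Before deleting or moving the data, you can first try leaving it in place: stop A, start B and C, and see whether they come up.)

What finally worked: stop the healthy member A, delete the contents of the data directory on B and C, start B and C first, and then start A, which had been stopped manually.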
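Because deleting the data directory cannot be undone, moving it aside is a safer variant of the same step. The following is a minimal sketch, not the procedure the author ran: the helper name `set_aside_data_dir` is hypothetical, and it assumes etcd is already stopped on that member and that etcd can recreate its data directory on the next start.

```shell
#!/bin/sh
# Hypothetical helper: move an etcd data directory aside instead of deleting it,
# so the old data can still be inspected or restored later.
# Prints the backup path on success.
set_aside_data_dir() {
    dir=$1
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
    backup="$dir.bak-$(date +%Y%m%d%H%M%S)"
    mv "$dir" "$backup" && echo "$backup"
}

# On members B and C (with etcd stopped) this would be called as:
#   set_aside_data_dir /data/etcd/etcd-server
```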

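- Restoring the cluster

In some cases A will not come back up either; the cluster information then has to be reconfigured and the workloads redeployed.

On all node hosts:

# check the nodes

kubectl get nodes

# check the control-plane components

kubectl get cs

# add the flanneld network configuration

/opt/etcd/etcdctl set /coreos.com/network/config '{"network": "172.14.0.0/16","backend": {"Type": "host-gw"}}'

# verify

/opt/etcd/etcdctl get /coreos.com/network/config

cd /opt/kubernetes/server/bin/conf

# configure kubelet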

kubectl config set-cluster myk8s --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem --embed-certs=true --server=https://192.168.14.10:7443 --kubeconfig=kubelet.kubeconfig

kubectl config set-credentials k8s-node --client-certificate=/opt/kubernetes/server/bin/certs/client.pem --client-key=/opt/kubernetes/server/bin/certs/client-key.pem --embed-certs=true --kubeconfig=kubelet.kubeconfig

kubectl config set-context myk8s-context --cluster=myk8s --user=k8s-node --kubeconfig=kubelet.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

kubectl create -f k8s-node.yaml

kubectl get nodes --show-labels

# configure kube-proxy

kubectl config set-cluster myk8s --certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem --embed-certs=true --server=https://192.168.14.10:7443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem --client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context myk8s-context --cluster=myk8s --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

# coredns, traefik, prometheus, grafana, kafka


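# Deploying the kube-apiserver cluster

[192.168.14.21, 192.168.14.22] Deploy the kube-apiserver cluster on these hosts.

[192.168.14.11, 192.168.14.12] These hosts run the L4 proxy, made highly available with keepalived (VIP: 192.168.14.10).

GitHub download link

GitHub download link for v1.22 (CHANGELOG)

- Download the kubernetes-server package, extract it and deploy it

cd /opt/soft # download the package into this directory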

tar xf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt/

# rename the directory and create a symlink

mv /opt/kubernetes/ /opt/kubernetes-v1.15.2

ln -s /opt/kubernetes-v1.15.2/ /opt/kubernetes

# remove unneeded packages and files

cd /opt/kubernetes

rm -f kubernetes-src.tar.gz # remove the source tarball

cd server/bin/ # remove unneeded files such as the docker image tarballs

rm -rf *.tar

rm -rf *_tag


# Issuing the apiserver-client certificate

[192.168.14.200] Issue the certificates on this host.

This certificate is used when the apiserver talks to etcd: the apiserver is the client and etcd is the server.

cd /opt/certs && vi /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}


- Generate the client certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

# Issuing the apiserver certificate

vi /opt/certs/apiserver-csr.json

[192.168.14.10] The VIP

[192.168.14.21, 192.168.14.22, 192.168.14.23] IP addresses where the apiserver may run

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"10.254.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"192.168.14.10",

"192.168.14.21",

"192.168.14.22",

"192.168.14.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}
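The `10.254.0.1` entry in `hosts` is the first address of the service range that is passed to the apiserver later via `--service-cluster-ip-range 10.254.0.0/16`; the built-in `kubernetes` service is assigned that address, so it has to be covered by the certificate. A minimal sketch of the arithmetic, assuming an IPv4 CIDR whose network address is already aligned (the helper name `first_service_ip` is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: print the first usable address of an aligned IPv4 CIDR,
# e.g. 10.254.0.0/16 -> 10.254.0.1.
first_service_ip() {
    net=${1%/*}                          # drop the "/16" prefix length
    echo "$net" | awk -F. '{ printf "%d.%d.%d.%d\n", $1, $2, $3, $4 + 1 }'
}

first_service_ip 10.254.0.0/16   # prints 10.254.0.1
```

If the service range is changed, this entry in the CSR has to change with it before the certificate is signed.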


- Generate the apiserver certificate and private key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver

# Deploying the apiserver service

[192.168.14.21, 192.168.14.22] Deploy the apiserver cluster on these hosts.

mkdir -p /opt/kubernetes/server/bin/{certs,conf}

cd /opt/kubernetes/server/bin/certs

# Upload the six .pem files issued above and earlier: apiserver-key.pem apiserver.pem ca-key.pem ca.pem client-key.pem client.pem

# restrict permissions on the private keys

chmod 600 ./*-key.pem
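To double-check the result, the key files can be scanned for any mode other than 600. This is a minimal sketch that assumes GNU `stat` (as shipped with CentOS); the function name `loose_keys` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list private keys in a directory whose mode is not 600.
# Assumes GNU stat (stat -c); prints nothing when every key is already 600.
loose_keys() {
    for f in "$1"/*-key.pem; do
        [ -e "$f" ] || continue          # the glob matched nothing
        mode=$(stat -c %a "$f")
        [ "$mode" = "600" ] || echo "$f has mode $mode"
    done
}

loose_keys /opt/kubernetes/server/bin/certs
```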


- Add the apiserver audit policy YAML

cd /opt/kubernetes/server/bin/conf && vi audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"


- Add the apiserver startup script

vi /opt/kubernetes/server/bin/kube-apiserver.sh

To see help for the available flags: /opt/kubernetes/server/bin/kube-apiserver --help

#!/bin/bash

./kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./certs/ca.pem \

--requestheader-client-ca-file ./certs/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./certs/ca.pem \

--etcd-certfile ./certs/client.pem \

--etcd-keyfile ./certs/client-key.pem \

--etcd-servers https://192.168.14.12:2379,https://192.168.14.21:2379,https://192.168.14.22:2379 \

--service-account-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.254.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./certs/client.pem \

--kubelet-client-key ./certs/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./certs/apiserver.pem \

--tls-private-key-file ./certs/apiserver-key.pem \

--v 2
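Note that `--service-node-port-range 3000-29999` replaces the default NodePort range of 30000-32767, so NodePort values taken from other tutorials may be rejected by this cluster. A minimal sketch for checking a port against the configured range (the helper name `in_node_port_range` is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: succeed if PORT lies inside a "LOW-HIGH" range such as
# the value given to --service-node-port-range above.
in_node_port_range() {
    low=${1%-*}     # text before the dash
    high=${1#*-}    # text after the dash
    [ "$2" -ge "$low" ] && [ "$2" -le "$high" ]
}

for port in 3000 8080 30080; do
    if in_node_port_range 3000-29999 "$port"; then
        echo "$port: allowed"
    else
        echo "$port: outside the range"
    fi
done
```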


# Managing the apiserver service with supervisord

vi /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-14-21] ; change to match the actual host IP

command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)


- Create the apiserver audit log directory and make the script executable

chmod +x /opt/kubernetes/server/bin/kube-apiserver.sh

mkdir -p /data/logs/kubernetes/kube-apiserver


- Reload the supervisor configuration and check the service

supervisorctl update

supervisorctl status

netstat -luntp|grep kube-api


[root@hdss14-21 bin]# supervisorctl update

[root@hdss14-21 bin]#

[root@hdss14-21 bin]# supervisorctl status

etcd-server-14-21 RUNNING pid 1665, uptime 0:13:04

kube-apiserver-14-21 RUNNING pid 1837, uptime 0:06:15

[root@hdss14-21 bin]#

[root@hdss14-21 bin]# netstat -luntp|grep kube-api

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 1838/./kube-apiserv

tcp6 0 0 :::6443 :::* LISTEN 1838/./kube-apiserv


# Nginx L4 proxy for the apiserver

[192.168.14.11, 192.168.14.12] Deploy Nginx and keepalived on these hosts (virtual IP: 192.168.14.10).

- Install and configure Nginx

Recommended nginx version: 1.16 or later.

yum install -y nginx

mkdir /etc/nginx/stream.d


In /etc/nginx/nginx.conf, add the following line at the top level (at the same level as the http block, not inside it):

include /etc/nginx/stream.d/*.conf;

- Add the stream configuration, listening on port 7443

vi /etc/nginx/stream.d/apiserver.conf

stream {

upstream kube-apiserver {

server 192.168.14.21:6443 max_fails=3 fail_timeout=30s;

server 192.168.14.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}


- Check the configuration and start nginx

nginx -t

systemctl start nginx

systemctl enable nginx


- Install and configure keepalived

yum install keepalived -y

- Write the port check script

vi /etc/keepalived/check_port.sh

#!/bin/bash

# Usage: check_port.sh PORT; exits non-zero if nothing is listening on PORT.

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

PORT_PROCESS=$(ss -lnt | grep -c ":$CHK_PORT ")

if [ "$PORT_PROCESS" -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Cant Be Empty!"

exit 1

fi
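A plain `grep` for the port number also matches longer ports that merely contain it (for example 17443 when looking for 7443). As an illustration of a stricter match, here is a minimal sketch that reads `ss -lnt` output on stdin and compares the port field exactly. The function name `port_listening` is hypothetical, and the column layout (state first, local address fourth) is an assumption about the `ss` output format.

```shell
#!/bin/sh
# Hypothetical stricter check: read `ss -lnt` output on stdin and succeed only
# if some local address ends in exactly ":PORT".
port_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
        END { exit !found }'
}

# Example: a listener on 17443 must not count as a listener on 7443.
printf 'LISTEN 0 128 *:17443 *:*\n' | port_listening 7443 || echo "7443 is not listening"
```

On a live host it would be used as `ss -lnt | port_listening 7443`.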


Keepalived master (192.168.14.11)

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 192.168.14.11

script_user root

enable_script_security

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to match the actual network interface

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 192.168.14.11

nopreempt # non-preemptive mode

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.14.10

}

}


Keepalived backup (192.168.14.12)

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 192.168.14.12

script_user root

enable_script_security

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to match the actual network interface

virtual_router_id 251

priority 90

advert_int 1

mcast_src_ip 192.168.14.12

nopreempt

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.14.10

}

}


- Start keepalived

Start the master first, then the backup.

chmod +x /etc/keepalived/check_port.sh

systemctl start keepalived

systemctl enable keepalived

ip a |grep 192.168.14.10


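Check that the VIP fails over correctly:

1. Stop nginx on 192.168.14.11 and check whether the VIP moves to 192.168.14.12.
2. Start nginx on 192.168.14.11 again; because of the nopreempt option, the VIP does not move back to 192.168.14.11 immediately.
3. Restart keepalived on 192.168.14.12 and check whether the VIP moves back to 192.168.14.11.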
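The priorities behind this test can be worked out from the two configurations: with a negative `weight`, keepalived lowers a node's priority by that amount while the tracked script is failing. The sketch below only illustrates this arithmetic with the values from the configs above (the function name `effective_priority` is hypothetical); whether the VIP actually moves also depends on `nopreempt` and on the keepalived version in use.

```shell
#!/bin/sh
# Effective VRRP priority: with a negative script weight, the base priority is
# lowered by that amount while the tracked check is failing (check_failed=1).
effective_priority() {
    base=$1 weight=$2 check_failed=$3
    if [ "$check_failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

echo "192.168.14.11 nginx up:   $(effective_priority 100 -20 0)"
echo "192.168.14.11 nginx down: $(effective_priority 100 -20 1)"
echo "192.168.14.12 nginx up:   $(effective_priority 90 -20 0)"
```

With nginx down on 192.168.14.11 its priority drops to 80, below the 90 of 192.168.14.12.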

# Deploying kube-controller-manager

[192.168.14.21, 192.168.14.22] Deploy kube-controller-manager on these hosts.

- Add the startup script

vi /opt/kubernetes/server/bin/kube-controller-manager.sh

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.254.0.0/16 \

--root-ca-file ./certs/ca.pem \

--v 2


# Managing the kube-controller-manager service with supervisord

vi /etc/supervisord.d/kube-conntroller-manager.ini

[program:kube-controller-manager-14-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)


- Create the controller-manager log directory and make the script executable

mkdir -p /data/logs/kubernetes/kube-controller-manager

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh


- Reload the supervisor configuration and check the service

supervisorctl update

supervisorctl status


# Deploying kube-scheduler

[192.168.14.21, 192.168.14.22] Deploy kube-scheduler on these hosts.

- Add the startup script

vi /opt/kubernetes/server/bin/kube-scheduler.sh

#!/bin/sh

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2


# Managing the kube-scheduler service with supervisord

vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-14-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)


- Create the scheduler log directory and make the script executable

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

mkdir -p /data/logs/kubernetes/kube-scheduler


- Reload the supervisor configuration and check the service

supervisorctl update

supervisorctl status


- Add a symlink for the kubectl command

ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

- Check the services

kubectl get cs

# Download locations (personal storage)

#etcd

etcd-v3.1.20-linux-amd64.tar.gz

#kube-server

kubernetes-server-linux-amd64-v1.15.2.tar.gz
